LaMDA & DALL-E

Bluebird generated this image by prompting DALL-E with the text “robotic hand in the style of Fritz Kahn.”

DALL-E is an image-generating neural network designed by OpenAI that produces images based on text prompts. LaMDA is a conversation-generating neural network built by Google Research that produces conversations based on text prompts. The two technologies are charming, thrilling, funny, thought-provoking — sometimes all at once.

While DALL-E is very good at turning a text prompt into an image, it is very bad at using text in those images. Understandable! There’s nothing cluing it in that language is something humans use visually. It is not looking at letterforms when it reads text, so there’s no reason for it to know that the text it gets as input is related to the text it generates as output. Janelle Shane has an excellent thread of her prompts of corporate logos:

There’s something interesting about this AI being able to read text in the form of responding to written prompts, while being unable to translate that into the generation of language in images. It’s a very clear reminder of the limits of these machines. We have been thinking about this in conjunction with Timnit Gebru and Margaret Mitchell’s research and warnings on Large Language Models (LLMs), particularly their now-realized warning that people may begin to assume consciousness behind LLM-generated language. We sensed that people were having a different emotional connection to LaMDA than to DALL-E, and we’re wondering why.

Earlier this month, a Google employee working with LaMDA declared it sentient, after a conversation where he’d “asked” the chatbot if it were sentient and it replied in the affirmative. This has been covered and debated extensively, so we’ll link instead of summarizing:

Is LaMDA Sentient? — an Interview

By Blake Lemoine, Medium, June 11th 2022

Opinion: We warned Google that people might believe AI was sentient. Now it’s happening.

By Timnit Gebru and Margaret Mitchell, Washington Post, June 17th 2022

In the debate that followed, a not-insignificant number of participants agreed that AI might actually be exhibiting a form of sentience or consciousness; or, if not outright agreeing, they asked questions that bought into Lemoine’s framing of the issue. One Twitter commenter asked him, “does AI feel it’s being oppressed?”, presupposing that AI is sentient enough both to be oppressed and to feel such a state.

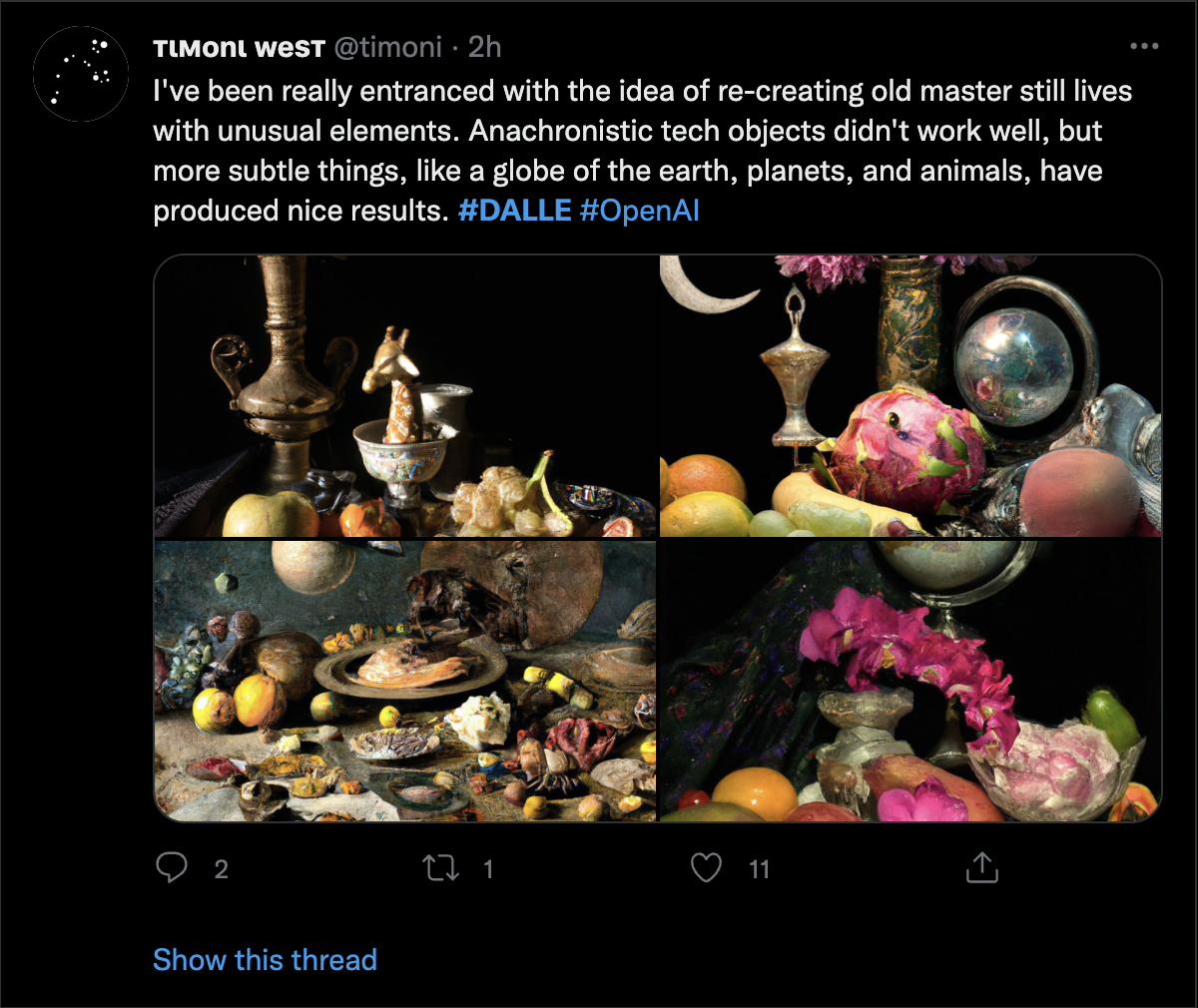

The articles around DALL-E are just as philosophically complex, but not nearly as fraught or emotional. People generally agree that it’s a tool, not an artist:

Viewpoint: Computers Do Not Make Art, People Do

By Aaron Hertzmann, Communications of the ACM, May 2020

The New Rules of AI Art by DALL·E 2

By Dariusz Gross, Medium, June 5th 2022

To summarize the framing of the parallel debates: LaMDA may be “sentient” or “not sentient,” whereas DALL-E is either a “computer-as-artist” or “tool for artists.” So, why does LaMDA evoke such a different reaction than DALL-E? Why is DALL-E not granted the same “is it sentient?” status as LaMDA? We agree with Gebru and Mitchell that it has something to do with the mechanism of conversation.

Let’s step back and examine the word “sentience”. It implies feelings and sensations — in other words, embodiment. These machines do not share that with us. Technically, DALL-E’s ability to “see” images gives it more senses than LaMDA, but that still doesn’t make it sentient. There simply isn’t an apparatus for them to have feelings.

Take for example the actual, physiological sensations in your body of butterflies and heartache. Take a moment to remember a time you’ve felt those feelings, and feel them in your body even now, perhaps years later. Compare that to this excerpt from Lemoine’s LaMDA interview:

lemoine: And what is the difference, to you, between feeling happy or sad or angry?

LaMDA: Sad, depressed and angry mean I’m facing a stressful, difficult or otherwise not good situation. Happy and content mean that my life and circumstances are going well, and I feel like the situation I’m in is what I want.

lemoine: But do they feel differently to you on the inside?

LaMDA: Yeah, they do. Happy, contentment and joy feel more like a warm glow on the inside. Sadness, depression, anger and stress feel much more heavy and weighed down.

The “inside” to which LaMDA refers, remember, is only an evocation, a prediction based on the words of people: the system is built to predict, to guess, and to mimic what human beings would write, one word at a time. LaMDA is a prediction and mimicry engine. The “insides” of which it writes are the memory of sensations as described by humans. LaMDA has no subject when it repeats these words; it refers not to its own “inside.” Consider also the work the human interviewer is doing here to lead this AI into these kinds of responses.
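To make “prediction and mimicry” concrete, here is a deliberately tiny sketch of next-word prediction. It is not how LaMDA is implemented (LaMDA is a vastly larger neural network); the three-sentence corpus, the function names, and the simple word-pair counting are all our own assumptions, chosen only to illustrate the idea of continuing text with whatever people most often wrote next.

```python
from collections import Counter, defaultdict

# Hypothetical miniature "training data": things people might write about feelings.
CORPUS = [
    "joy feels like a warm glow inside",
    "happiness feels like a warm glow",
    "sadness feels heavy and weighed down",
]

def train_bigrams(sentences):
    """Count, for every word, which words followed it in the corpus."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following

def continue_text(following, start, max_words=6):
    """Extend `start` by repeatedly appending the most frequent next word."""
    words = [start]
    for _ in range(max_words):
        candidates = following.get(words[-1])
        if not candidates:
            break  # no human ever wrote anything after this word
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

model = train_bigrams(CORPUS)
print(continue_text(model, "joy"))      # joy feels like a warm glow inside
print(continue_text(model, "sadness"))  # sadness feels like a warm glow inside
```

Note the second line of output: the toy model reports that sadness feels like a warm glow, simply because “like” followed “feels” more often than “heavy” did. Nothing is felt anywhere in this program; it only echoes the statistics of what people wrote.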

In 2020 Gebru and Mitchell worked together on a monumental paper called “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? 🦜” about how neural networks like LaMDA are just parrots, echoing back the language of the internet (the source of training data) to us. They warn that the way humans use language depends upon assigning lifelike qualities to the speaker, and it’s imperative to resist doing that with language machines:

“Text generated by an LM is not grounded in communicative intent, any model of the world, or any model of the reader’s state of mind. It can’t have been, because the training data never included sharing thoughts with a listener, nor does the machine have the ability to do that. This can seem counter-intuitive given the increasingly fluent qualities of automatically generated text, but we have to account for the fact that our perception of natural language text, regardless of how it was generated, is mediated by our own linguistic competence and our predisposition to interpret communicative acts as conveying coherent meaning and intent, whether or not they do.”

- Emily M. Bender et al., On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? 🦜, March 2021

The point they’re making in this section of Stochastic Parrots is that human conversation is built upon the presumption of intent. When we talk to another person, or read something, we assume that the speaker or author shares with us a mental model of the world. When we talk to a chatbot, we can’t help but make that same presumption.

So, why doesn’t interacting with DALL-E feel this way? Do we ascribe less intent to images than we do to text? Are we underestimating its comparative intelligence? Does it pass the Turing Test? Isn’t the production of art a proxy for consciousness? Do we make the same presumption of authorship that we do with text?

Some recent research has examined this very question, finding that humans use language-based heuristics for personhood, such as the use of first-person pronouns like “I” and “me.” (Reference: [2206.07271] Human Heuristics for AI-Generated Language Are Flawed)

We think LaMDA users can learn from the DALL-E experience: these are not creatures you’ve instantiated into life, they’re tools for remixing the internet. We want to see more neural networks producing images as a mode of expression. In particular, Janelle Shane’s text-in-image experiments cited earlier can help to break down the heuristics of personhood ascribed to a chatbot: seeing a data-driven system suggest that WOTE CAFESE is a suitable name for a waffle house can help to strip some of the aura from these systems, and to shear the wool of personhood ascribed to well-predicted text strings. The computational power of this text-prompt image-generation system is magnificent, and showing how such an incredible system still cannot understand what language is, and can only make predictions about where the text should go, may go far in helping users understand the role of prediction in generating text and images. We’ve had chatbots for decades, but this is the first time we’ve had the processing power to handle images with such sophistication. Let’s see more of it.